If one word captured the theme of the generative AI space this week, it would likely be AutoGPT.

As you can see below, interest in AutoGPT has spiked in quite the “hockey-stick” curve. The good news is, we’re going to unpack what AutoGPT is and why it matters.

👨🏻‍💼 What are generative agents (AutoGPTs)?

🔥 The battle for enterprise AI heats up

🕺 Meta open sources animated drawings

What are generative agents (AutoGPTs)?

AI Twitter lit up this week with examples of the many AutoGPT-style generative agents that have popped up recently.

What is AutoGPT?

Think about how you interact with ChatGPT today: you ask a question and get a response. It is a transactional, one-turn-at-a-time exchange.

AutoGPT removes the need for this back-and-forth by chaining GPT model calls together and giving them a memory. The models’ outputs then feed into each other as they work together toward a goal.

With agents, you provide a goal, and the agent works toward it autonomously without you needing to intervene. It generates tasks, stores the results, and works out the next tasks until it achieves its goal.
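To make that loop concrete, here is a minimal Python sketch of the generate, execute, store, and replan cycle. It is an illustration under stated assumptions, not AutoGPT’s or AgentGPT’s actual code: `call_llm` is a hypothetical stand-in for a real model call, `fake_llm` is a toy stub so the example runs offline, and the task-queue design is our own simplification.

```python
from collections import deque
from typing import Callable


def parse_tasks(text: str) -> list[str]:
    """Split a newline-separated model response into clean task names."""
    return [line.strip("- ").strip() for line in text.splitlines() if line.strip()]


def run_agent(goal: str, call_llm: Callable[[str], str], max_steps: int = 10) -> list[tuple[str, str]]:
    """Generate tasks, execute them, store results, and replan until done.

    `call_llm` is a hypothetical stand-in for a real language-model API call.
    Returns the agent's memory as (task, result) pairs, in execution order.
    """
    memory: list[tuple[str, str]] = []
    # 1. Ask the model to break the goal into an initial task list.
    tasks = deque(parse_tasks(call_llm(f"List tasks to achieve: {goal}")))

    for _ in range(max_steps):  # hard cap so a confused agent cannot loop forever
        if not tasks:
            break  # nothing left to do: treat the goal as achieved
        task = tasks.popleft()
        # 2. Execute the task, giving the model earlier results as context.
        context = "; ".join(result for _, result in memory)
        result = call_llm(f"Goal: {goal}\nContext: {context}\nDo task: {task}")
        # 3. Store the result so later steps can build on it.
        memory.append((task, result))
        # 4. Ask for follow-up tasks, skipping ones already queued or done.
        done = {t for t, _ in memory}
        for new_task in parse_tasks(call_llm(f"Goal: {goal}\nLast result: {result}\nNew tasks?")):
            if new_task not in done and new_task not in tasks:
                tasks.append(new_task)
    return memory


def fake_llm(prompt: str) -> str:
    """Deterministic toy stand-in for a model so the sketch runs offline."""
    if prompt.startswith("List tasks"):
        return "- Market research\n- Brainstorm business ideas\n- Write a business plan"
    if prompt.endswith("New tasks?"):
        return ""  # this toy model never proposes follow-up tasks
    return "done: " + prompt.rsplit("Do task: ", 1)[-1]


if __name__ == "__main__":
    for task, result in run_agent("Earn $1,000,000 with a new AI business idea", fake_llm):
        print(f"{task} -> {result}")
```

With the stub, the agent works through its three tasks in order and stops when the queue is empty. In a real agent the `max_steps` cap matters: a model that keeps proposing follow-up tasks would otherwise never finish.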

Below is an example using AgentGPT. I found it the most user-friendly option because it comes with a user interface. I named the agent botified and gave it the goal “Create a plan to earn $1,000,000 by generating a new business idea around AI.”

The agent came up with several tasks, such as market research, brainstorming business ideas, and creating a business plan.

In case you’re wondering what the $1M business idea was: an AI-powered virtual styling assistant that helps people choose their outfits for various occasions. This isn’t bad, by the way! Maybe someone in the botified community will go create it! 🙂

A tiny virtual AI town was populated with agents and the results were really interesting!

Have you ever wondered what would happen if you filled a virtual town with 25 AIs and let them loose to see how they would interact? Researchers from Google and Stanford ran this experiment, and as it turns out, the agents are really nice to each other and even brush their teeth!

If you want to read the results of the research, the 22-page paper is here for your consumption. But don’t worry, I’ll give you the TLDR below.

Below is an example of the tiny town and the AI agents interacting. They were all assigned names.

As you can see, Abigail says, “Hey Klaus, mind if I join you for a coffee?”, and Klaus responds, “Not at all, Abigail. How are you?”

Image Credits: Google / Stanford University

The researchers seeded memories into each agent.

Each agent was represented by a sprite avatar, and the researchers wrote a paragraph describing the agent’s identity, occupation, and relationships with other agents.

Example:

“John Lin is a pharmacy shopkeeper at the Willow Market and Pharmacy who loves to help people. He is always looking for ways to make the process of getting medication easier for his customers; John Lin is living with his wife, Mei Lin, who is a college professor, and son…”
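As we understand the Generative Agents paper, each semicolon-delimited phrase of a seed paragraph like this one was entered as a separate initial memory. The sketch below assumes that approach; the `Memory` class, its `created_at` field, and `seed_memories` are hypothetical names for illustration, not the researchers’ code.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    """One entry in an agent's memory stream (hypothetical structure)."""
    description: str
    created_at: int  # simulation time step when the memory was recorded


def seed_memories(paragraph: str, start_time: int = 0) -> list[Memory]:
    """Split an identity paragraph at semicolons; each phrase becomes one memory."""
    phrases = (phrase.strip() for phrase in paragraph.split(";"))
    return [Memory(description=p, created_at=start_time) for p in phrases if p]


# The quoted seed text, truncated before the part elided in the article.
SEED = (
    "John Lin is a pharmacy shopkeeper at the Willow Market and Pharmacy who loves to help people. "
    "He is always looking for ways to make the process of getting medication easier for his customers; "
    "John Lin is living with his wife, Mei Lin, who is a college professor"
)

if __name__ == "__main__":
    for memory in seed_memories(SEED):
        print(memory)
```

Here the quoted text yields two starting memories; everything the agent later observes would be appended to the same list as new `Memory` entries.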

As the agents interacted in their world, they formed memories of each other and of their interactions, but they sometimes drew conclusions about relationships that humans typically would not.

For example, one character believed he had a good relationship with another character simply because he frequently passed that character in the park. They never had any meaningful interactions, but the frequency of their encounters seemed to outweigh both the substance of their conversations and the relationships predefined in their identities.

What is no one talking about?

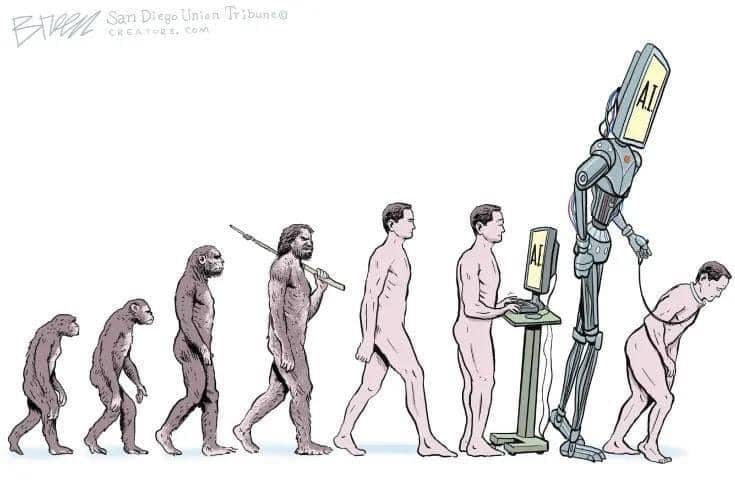

This was a relatively primitive experiment with generative AI agents, and the technology will surely evolve from here. Everyone in the Twitter-verse is excited about it, but no one is talking about the fact that these creations are extremely primitive versions of future autonomous bots that could become either our assistants or, if we don’t develop them responsibly, our rulers. In the examples above, the researchers were able to recreate some human-like interactions. Just imagine where this will go with another 5-10 years of development. The best analogy I can think of is that AutoGPT today is where this old clunker was in its day: the machine seemed novel and exciting back then, just as generative AI agents do right now. But the reality is, we’re extremely early.

Obviously, they’re far from perfect right now and, as you can imagine, they use a lot of compute. Still, these kinds of simulations could be useful anywhere we want to better understand human interactions. Video games come to mind, but there could be other interesting use cases too, such as bringing us closer to having robot friends.

There are some potential societal impacts here that need to be considered. For example, people may attach human emotions to them and form relationships that may not be appropriate. In addition, generative agents could exacerbate existing risks associated with generative AI such as the risks around deepfakes. Only time will tell what the future holds here, but this is a very interesting development in generative AI. I’ll keep you posted.

The battle for enterprise AI heats up

Along with the AutoGPT madness, several enterprise AI launches lit up the AI landscape this week. Below is the TLDR of each.

Amazon launches Bedrock & CodeWhisperer

Bedrock:

- Amazon Bedrock offers tools for companies to build AI applications tailored to their business needs.
- Bedrock provides two Amazon-designed large language models: Titan Text (for text tasks such as summarization, text generation, and Q&A) and Titan Embeddings (for search and personalization).
- Clients can customize Titan models without their data being used to train the underlying models, which helps keep that data secure.
- Pegasystems and Salesforce are among the companies planning to use Bedrock.
- Amazon aims to democratize access to AI development through a secure online service.
- Amazon continues to invest heavily in its cloud computing business, including a $35 billion investment in data centers in Virginia.

CodeWhisperer:

- CodeWhisperer competes with GitHub Copilot and with Replit, a Google partner.
- Amazon CodeWhisperer is a real-time AI coding companion, now generally available and free for individual developers.
- CodeWhisperer assists with routine tasks, unfamiliar APIs and SDKs, AWS APIs, and other common coding scenarios.
- The tool supports various programming languages, including Python, Java, JavaScript, TypeScript, C#, Go, Rust, PHP, Ruby, Kotlin, C, C++, Shell scripting, SQL, and Scala.
- It helps developers code securely and responsibly by filtering out code suggestions that may be biased and by scanning code for vulnerabilities.

Elon Musk and Twitter purchase 10,000 GPUs

- Elon Musk advances AI project at Twitter, purchasing roughly 10,000 GPUs.
- Project involves a large language model, potentially for search or advertising.
- Musk has criticized generative AI, calling for regulation in the public interest.
- AI engineers Igor Babuschkin and Manuel Kroiss from DeepMind join Twitter.
- GPUs likely cost tens of millions of dollars; expected to operate in Twitter’s Atlanta data center.

Google releases Med-PaLM 2, generative AI for healthcare

- Google announces limited access to its medical large language model, Med-PaLM 2.
- Med-PaLM 2 scores 85%+ accuracy on the MedQA dataset and 72.3% on the MedMCQA dataset.
- Select Google Cloud customers to test it and provide feedback on potential use cases.
- Safety, equity, and unfair-bias evaluations considered during Med-PaLM 2 development.
- Google also announces an AI-enabled Claims Acceleration Suite to streamline health insurance processing.

Stability AI releases SDXL

- Stability AI releases Stable Diffusion XL (SDXL) for enterprise clients.
- Improved photorealism, composition, and facial generation.
- Shorter prompts for descriptive imagery and legible text production.
- Functions include image-to-image prompting, inpainting, and outpainting.
- SDXL powers DreamStudio and third-party apps like NightCafe Studio.

Meta open sources animated drawings

This project launched in 2021 and has now been released to the public as open source, which lets developers use the code to build their own creations.

Meta commented, “by releasing the models and code as open source, the project provides a starting point for developers to build on and extend the project, fostering a culture of innovation and collaboration within the open source community.”

With this, you can essentially turn your doodles into animations.

Try it out here to see what you can make using Meta’s demo lab.

What implications do you think a tool like this could have on artificial intelligence capabilities?